Expanding capabilities at the edge

Here at Ghost, we're building layered defenses that our customers can leverage at different parts of their application, API, and cloud stacks. As such, we have tight integrations with a number of technology providers at these different layers - from workload orchestration platforms like Kubernetes, to cloud providers like AWS, Azure and Google Cloud, to edge providers like Cloudflare and Akamai.

Cloudflare in particular is an interesting integration partner because of their recent advances in pushing more compute and storage capabilities to the edge. You may remember in the early days of AWS that the first services they launched were SQS (queues), S3 (storage), and EC2 (compute) - the building blocks of modern applications and APIs. Cloudflare has had Workers (compute) since 2017, but released KV (storage) in 2020 and R2 (storage) in 2021. If you take into account the recently released Queues (Nov. 2022) and D1 (relational database), its easy to see that Cloudflare has compelling capabilities that offer an interesting deployment option to challenge that of the traditional cloud providers.

With this in mind, we thought we'd explore extending some of our application and API security goals out to the Cloudflare edge. Even though Cloudflare has some of their own security capabilities in the form of DDoS mitigation and WAF functions, what what we are aiming to do is leverage their capabilities for a more specialized and dynamic use case.

Reducing noise

One of our guiding principals at Ghost is reducing "toil" for our customers - reducing the amount of tedious, and often repetitive work that doesn't yield meaningful results. One way to do this is to reduce the amount of noise our customers see in the form of alerts, incidents, issues, and events. Reducing noise is an important way to increase signal.

It's common knowledge that a vulnerable system exposed to the Internet will last mere minutes before being compromised, exploited, or otherwise taken advantage of. Depending on the underlying operating system and the resources it provides for attacker (e.g. access to bandwidth, compute resources, or sensitive data), constant Internet-wide mass scanning will alert attackers to its presence, and it is simply a matter of when - not if - the system is compromised.

A recent conference talk by GreyNoise offered emprical data to support the idea that simply reducing the visibility of vulnerable systems exposed to the Internet increased the mean time to compromise from 19 minutes to over 4 days. This exploration looks at ways we can use Cloudflare Workers and KV to reliably detect undesired sources and immediately take action to reduce their ability to connect to our applications.

Approach

The general idea for filtering out some of the noise associated with Internet-wide scanning is start with some notion of what this known-bad scanning activity looks like. For that, we turn to the excellent Nuclei project from Project Discovery. The Nuclei community maintains a large collection of templates that security researchers use to discover and test specific vulnerabilities and exploits. If we use these templates as a sample of known-bad scanning traffic, we can block the originators of that traffic at our edge and automatically block any further noise they make - with the added benefit of blocking any actual attack traffic they may attempt as well.

A quick look at Nuclei templates gives us some ideas for common attack paths to look for:

/wp-admin

/wp-config

/etc/passwd

/etc/shadow

/.env

../../

cgi-bin

.php

.cgi

interact.sh

win.ini

WEB-INF

<script>

%3Cscript%3C

We include .php here because 36% of the ~1,400 Nuclei templates involve some sort of PHP-related payload. If you use PHP in your environment, you may need to be more specific here to avoid blocking legitimate traffic.

If we treat requests for these paths as high confidence indicators of malicious scanning activity, we can safely block the sources of those requests and in theory reduce the noise we see - and have to triage - across our organization.

Benefits

We think of this type of approach as a form of canary detection, rather than a content blocking strategy. That is to say, we aren't simply trying to block malicious traffic based on its direct payload or content. Reconnaissance is necessary step in the attack process. This reconnaissance itself produces signal. By detecting requests to a known-bad paths, we can block the attacker source for a predetermined length of time - and potentially extend the block if the attacker continues to attempt to send us traffic.

Benefits of this type of approach include reducing noise as far upstream as possible (i.e. at the edge) and leveraging bad traffic directed at one application or API to protect other applications and APIs on our domain. Further, even though we don't expect to block all bad traffic, we can increase the attacking cost of bad actors by blocking or slowing down their automated activity.

How it works

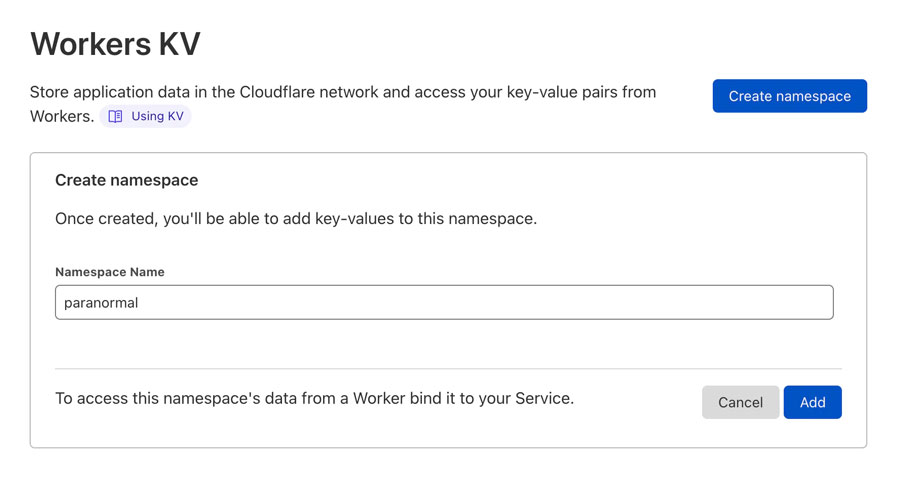

The architecture for a proof-of-concept is fairly simple. We'll deploy a small script to a Cloudflare Worker to inspect requests and write IP blocks to Cloudflare KV with a pre-configured TTL to auto-expire the blocks. We'll also extend the block if an attacker continues to make requests after we trigger a block on their IP address. We can configure the Worker to run on all requests to our domain, only a sub-domain, or any path or path pattern, giving us a lot of flexibility in how we deploy our canary blocks.

We should probably implement several different modes of operation to facilitate a gradual, low-risk rollout strategy. We'll go with these modes to start:

- Read-only: just log request to bad paths, don’t block anything

- Normal: one bad path request results in a block of the source IP address, expires in 1 hour

- Paranoid: one bad path request results in a block of the source IP address, every subsequent path hit extends the block by 3 hours

Now, let's build it...

Build it

name = "paranormal"

compatibility_date = "2022-11-09"

account_id = "7c79-example-e12120e65775bd2ecb3"

kv_namespaces = [

{

binding = "PARANORMAL",

id = "0e36-example-e4e9569d926b62c1cc",

preview_id = "165d-example-5cdf3e22b4c7d3a5dd"

}

]

[

"/wp-admin",

"/wp-config",

"/etc/passwd",

"/etc/shadow",

"/.env",

"../../",

"cgi-bin",

".php",

".cgi",

"interact.sh",

"win.ini",

"WEB-INF",

"<script>",

"%3Cscript%3E"

]

127.0.0.1,::1,8.8.8.8

import BAD_PATHS from './paths.json'

import NEVER_BLOCK from './allowed_ips.json'

/**

* Handle a request

* @param {Request} request

*/

async function handleRequest(request, ts) {

const url = new URL(request.url)

const blockStatus = 403 // HTTP status code to return on block (e.g. 401, 403, 404)

const TTL = 60 // expiration TTL in seconds (minimum 60)

const IP = request.headers.get('CF-Connecting-IP')

const key = IP

const path = url.pathname

const value = `${ts}-${path}`

/**

* set block flags

*/

let blocked = await PARANORMAL.get(key)

let willBlock = BAD_PATHS.includes(path)

const allowed = NEVER_BLOCK.includes(IP)

/**

* allow list by IP

*/

if (allowed) {

blocked = false

willBlock = false

console.log('[ok] request allowed from IP:', IP, 'on:', path)

}

/**

* already blocked, regardless of path

*/

if (blocked) {

console.log('[blk] request blocked from IP:', IP, 'on:', path)

// update TTL for already blocked IP

await PARANORMAL.put(key, value, { expirationTtl: TTL })

console.log('[blk] updating block expiration for IP:', IP, 'on:', path)

return new Response(null, { status: blockStatus })

}

/**

* will be blocked

*/

if (willBlock) {

// client is request a bad path, block for TTL seconds

await PARANORMAL.put(key, value, { expirationTtl: TTL })

console.log('[blk] creating block for IP:', IP, 'on:', path)

return new Response(null, { status: blockStatus })

}

/**

* if we got this far, request is OK

*/

console.log('[ok] request ok from IP:', IP, 'on:', path)

const response = await fetch(request)

return response

}

addEventListener('fetch', (event) => {

try {

let ts = Date.now()

event.respondWith(handleRequest(event.request, ts))

} catch (e) {

console.log('error', e)

}

})

wrangler publish worker.js

⛅️ wrangler 2.1.15 (update available 2.6.2)

------------------------------------------------------

Your worker has access to the following bindings:

- KV Namespaces:

- PARANORMAL: 0e36-example-e4e9569d926b62c1cc

Total Upload: 1.99 KiB / gzip: 0.80 KiB

Uploaded paranormal (0.67 sec)

Published paranormal (0.22 sec)

https://paranormal.acme.workers.dev

Test it out

$ curl https://api.mycorp.com/auth/status

{"status":"ok"}

$ curl https://api.mycorp.com/auth/status

{"status":403}

Pros and cons

Pros

- High confidence signal of bad requests - we can be confident that no legitimate requests would ever be made for paths such as /.env, /etc/passed, ../../, and <script>.

- Highly read performant - Cloudflare KV is highly read-optimized

- Broad coverage - we could get broad coverage across an entire domain with a single deployment

- Cost effective - Worker requests and KV reads/writes are relatively inexpensive

Cons

- Risky to deploy - if we trigger on an incorrect block path, we could block a lot of traffic

- IP-based - if we trigger a block on a NATed IP address, we could inadvertently block many innocent users

- Write limited - while Cloudflare KV is heavily read-optimized, the opposite is true of writes (both in terms of performance and cost)

- Cloudflare KV is eventually consistent - there is a slight delay to propagate KV writes out to all Cloudflare edge locations, which limits the real-time nature of our block methodology

What's next?

As a limited proof-of-concept, we were able to prove some basic functionality, However, there are a number of ways we might want to improve our approach.

- More precise detections

- Profile/fingerprint clients to make more nuanced decisions

- Only trigger on unsuccessful (i.e. non-20x) responses to minimize false positives

- Auto-block other bad IP addresses or IOCs from external threat feeds

Workers for platforms

With Cloudflare's recently released Workers for Platforms, there should be even more interesting opportunities to expand and extend these capabilities. Stay tuned for more!